Research

This page provides a gist of the type of research that my students and I do. Please visit Publications for more activities.

Offline Reinforcement Learning

Reinforcement learning (RL) is one of the fastest-growing research areas in machine learning. An RL agent learns the underlying dynamics (system identification) and a decision policy to succeed in this environment (optimal control) simultaneously by "trials-and-errors" in an interactive environment. In the real life applications of RL, however, it is rarely possible to have online access to such an environment. Instead, the agent is often stuck with an offline dataset of historical interactions (by a human agent or a previous algorithm making decisions). Our research aims at developing algorithms that learn from offline data with provable statistical efficency. The outcome of the research could significantly reduce the overhead of using RL techniques in real-life sequential decision-making problems such as those in power transmission, personalized medicine, new material discoveries, computer networking and various forms of business decision making.

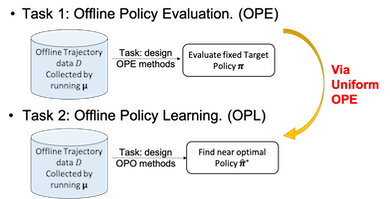

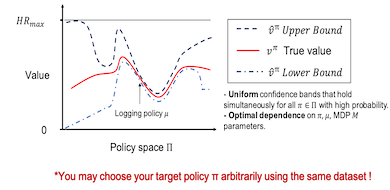

The two fundamental problems in offline RL are offline policy evaluation (OPE) or offline policy optimization / learning (OPO or OPL) (see the illustration above). We unify them via uniform convergence, with the long-term goal of developing a statistical learning theory for offline RL and to come up with exact optimal algorithm that makes more efficient use of the given data than any other algorithms in the universe (with the same input).

An expanding list of papers from my lab in offline RL are: [MIS for OPE,Tabular MIS, Uniform OPE, Uniform OPE++,Adaptive Offline Learning, Linear Function approximation]. More are coming (more generality, more provable optimal bounds, practical algorithms).

Adaptive Online Learning meets Trend Filtering

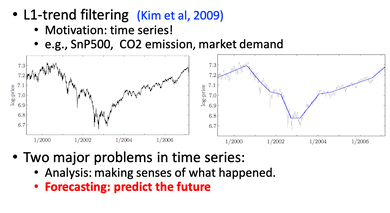

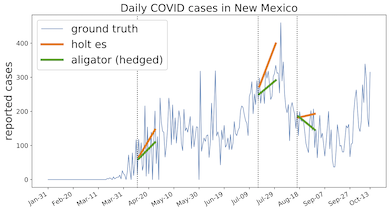

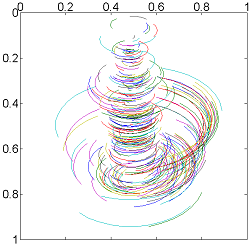

Trend filtering works great in time series analysis but does not apply to the time series forecasting problems. A recent line of work in my lab focuses on the intersection of trend filtering and adaptitve online learning, which reveals interesting new insights in both problems. Specifically, the estimation error can be written as a dynamic regret in online learning and local adpativity is closely related to strongly adaptivity in online learning.

On the one hand, this allows definitions and techniques in nonparametric regression to be introduced to online learning that results in natural ways of capturing unknown non-stationarity. This results in "fast rates" family with dynamic regrets of , , so on while adapting to unknown "path length". On the other hand, the online learning techniques produce new ways for nonparametric regression to (1) apply to forecasting problems, (2) to run more efficiently, (3) to avoid the need for choosing hyperparameters, e.g., regularization weights, and (4) to work without any stochastic assumptions.

Selected papers from this project include: [Arrows , AdaVAW, Aligator]. This paper addresses the TV1 problems in fully adversarial settings and won COLT'21 Best Student Paper.

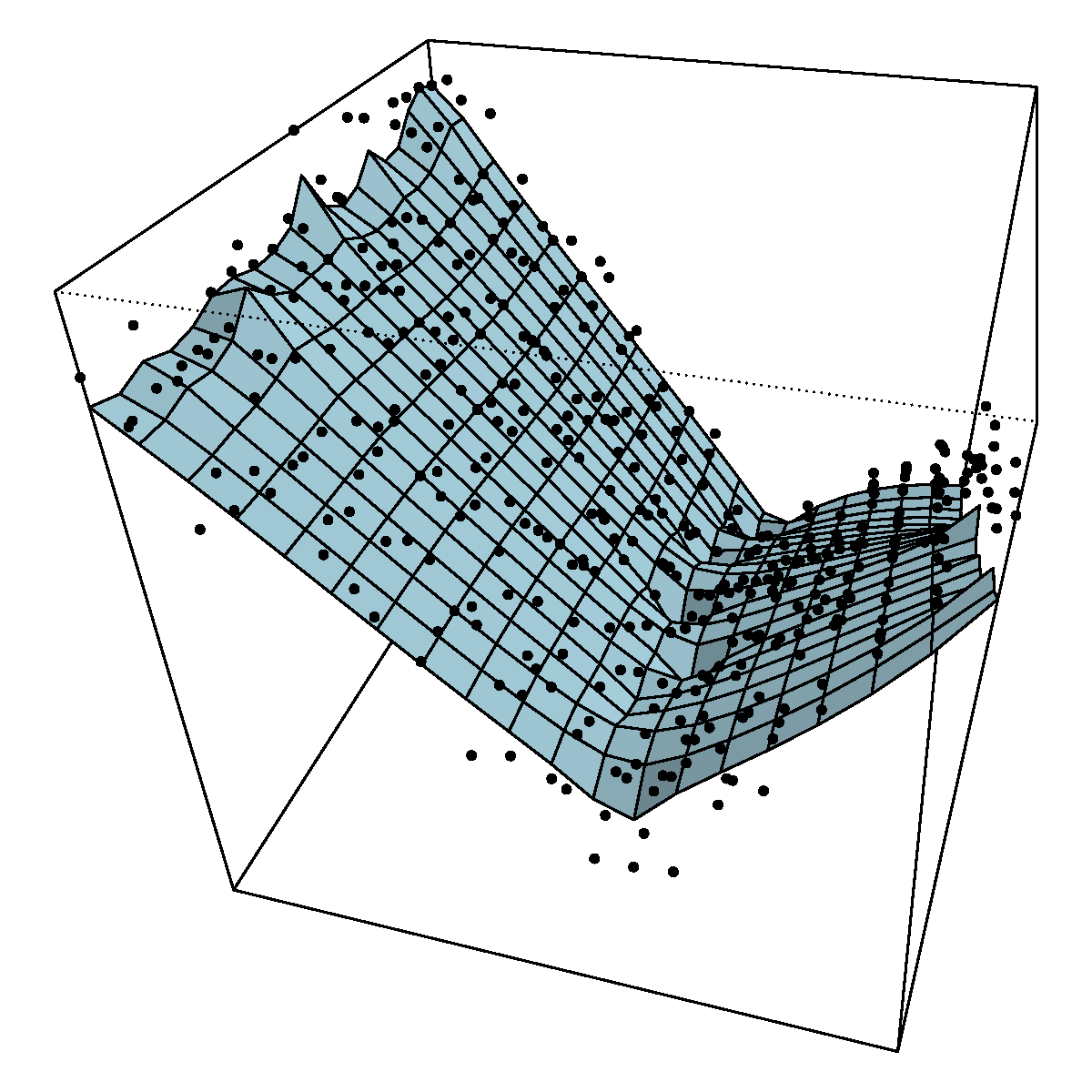

On the left of the figure above, we show the example from [Kim et al, 09] on applying L1 trend filtering to SnP500 data. On the right, we show an example of running our L1-Aligator on online trend estimation in forecasting COVID'19 hospitalization.

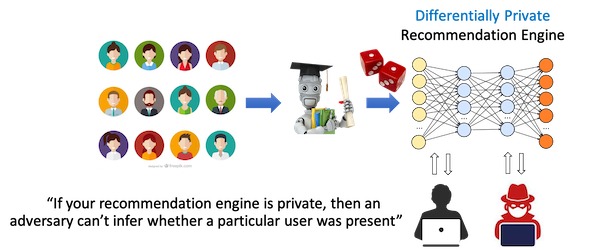

Practical Differential Privacy and Differentially Private Learning

Differential privacy (DP) is one of the most promising approaches towards addressing the privacy challenges in the era of artificial intelligence and big data. It represents a gold standard and is a key enabler in many applications including medical research, data sharing, training machine learning models with users' private data and their federated learning extensions. See the above illustration for an example of differentially private machine learning. Recently, DP is undergoing an exciting transition from science into technology. For instance, the US Census adopted differential privacy in 2020 Census; also, if you are sending text messages on your iPhone or browsing the web using Chrome, then chances are your data have been collected by Apple and Google using DP.

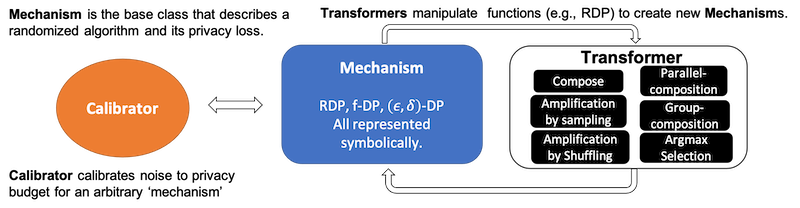

Our research in DP combines theory, algorithms with open source software to achieve the state-of-the-art privacy-utility tradeoff in many problems of interest. To make the algorithms more practical, we leverage data-dependent algorithm design, amplification by sampling, as well as RDP / f-DP based tighter privacy computation via our open source package: autodp. The above figure shows the new API of autodp, which enables flexible combination of existing building blocks while automating the computation of exact optimal privacy bounds.

Selected recent papers in DP includes: [subsampled RDP (AISTATS'19 Best Paper), Poisson-Sampled RDP , RDP for SVT, Per-instance DP, PrivateKNN]

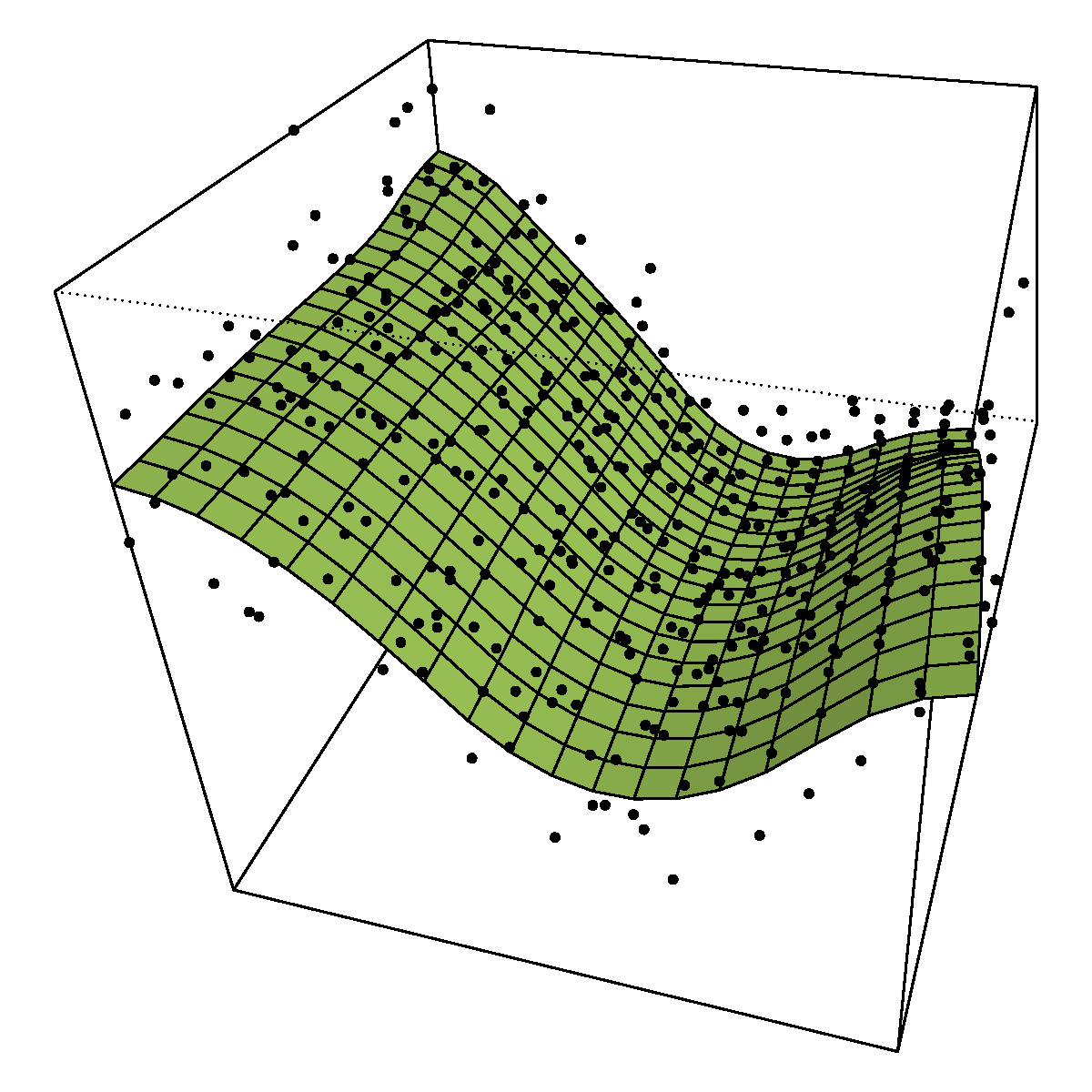

Adaptive Nonparametric Regression via Trend Filtering

Trend filtering is a new class of nonparametric regression methods with the property of being locally adaptive. In the univariate case, it behaves exactly the same as the locally adaptive smoothing splines [Mammen and Van de Geer, 1997], i.e., minimax optimal for the classes of functions with hetereogeneous smoothness; computationally it is way faster than its spline cousin.

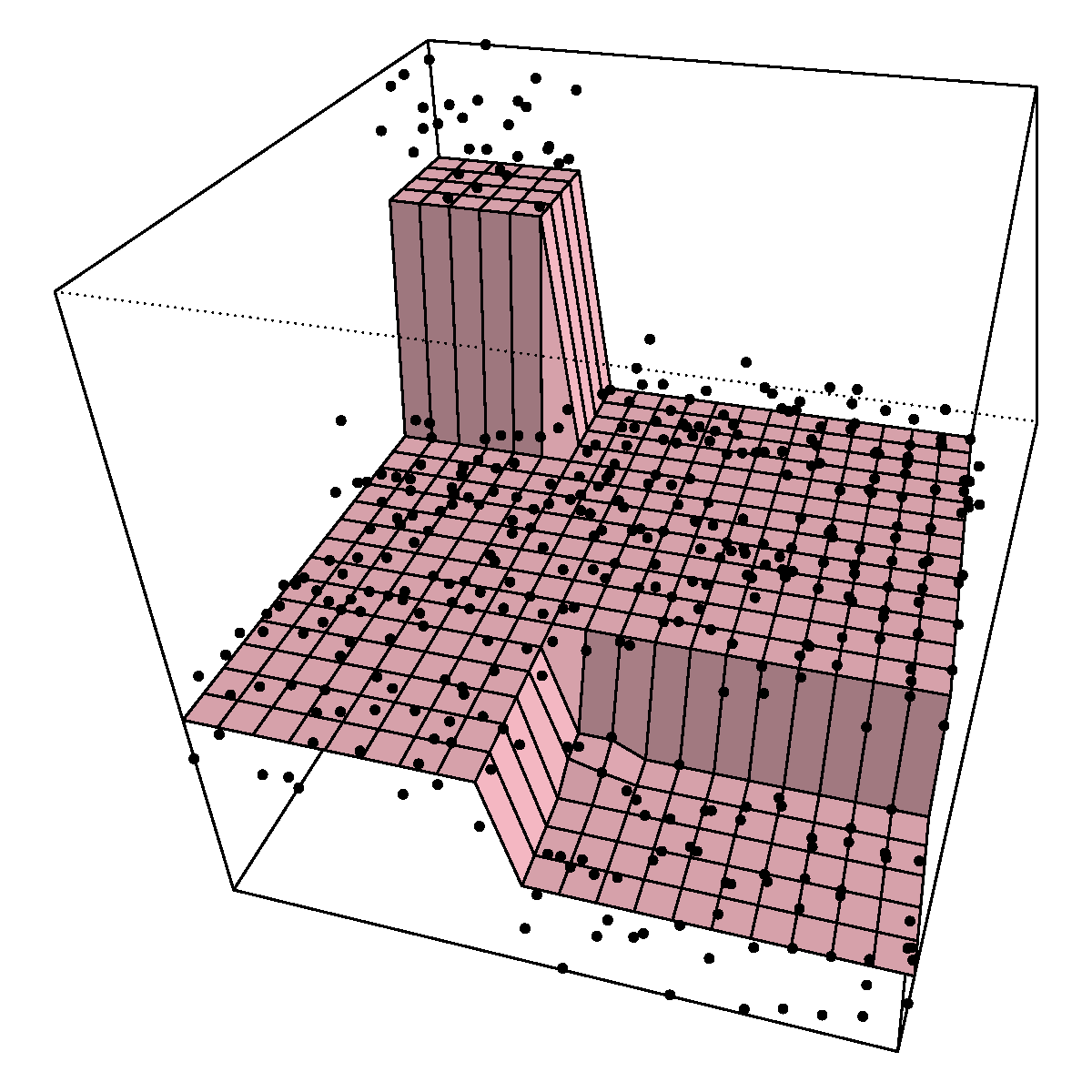

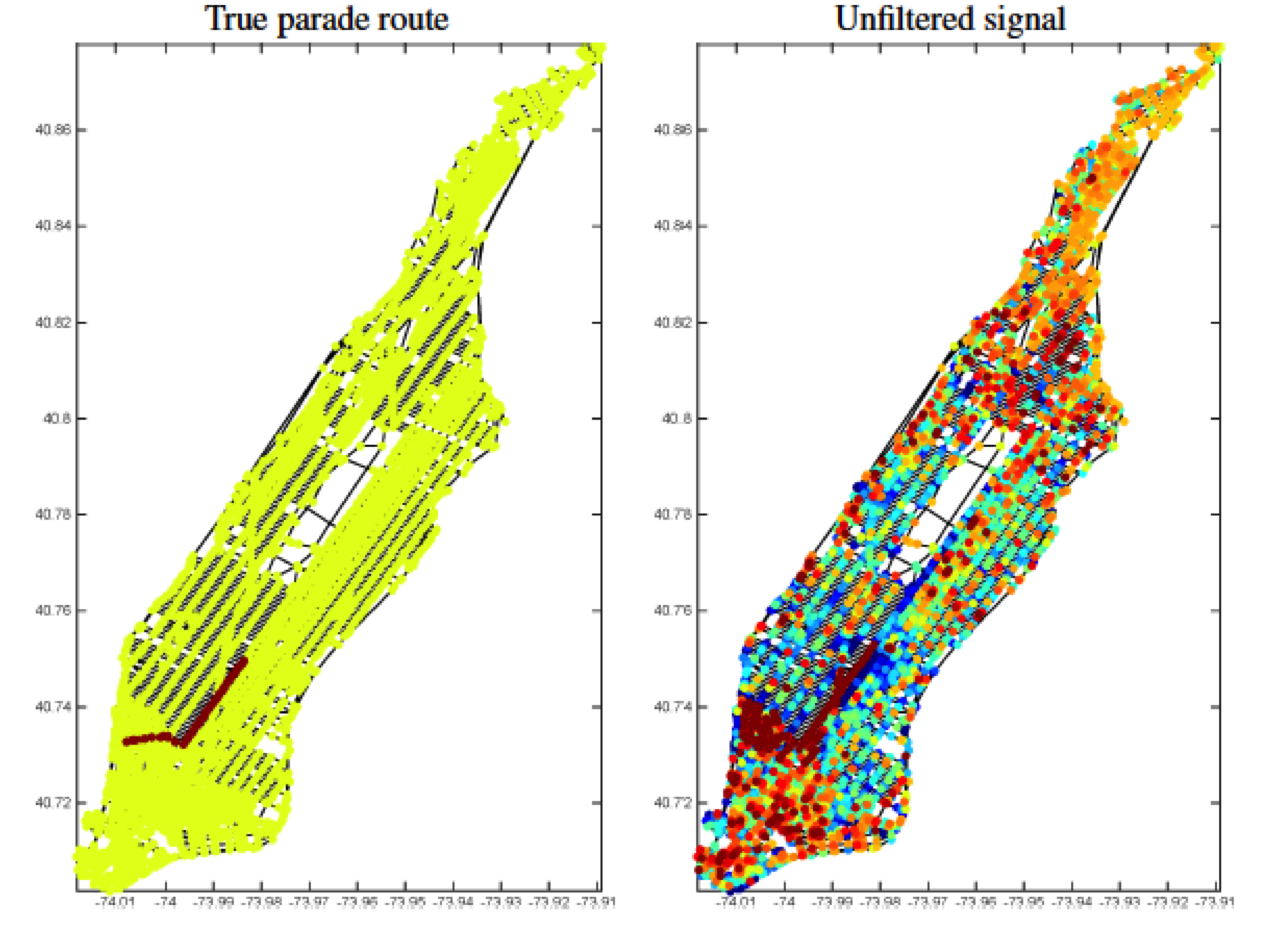

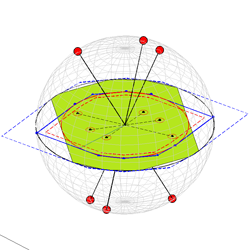

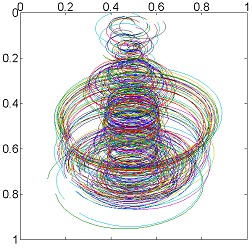

Graph trend filtering (GTF) is designed to filter signals obsesrved on the vertices of a graph with hetereogeneous smoothness. The above illustrations are examples of running GTF with k=0, k=1 and k=2, on 2D lattice grids, which induces piecewise constant, piecewise linear and piecewise quadratic structures on the filtered signal over a graph.

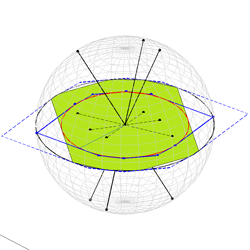

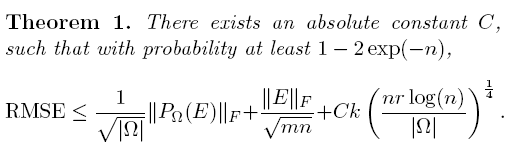

Theoretical Foundation for Noisy Sparse Subspace Clustering

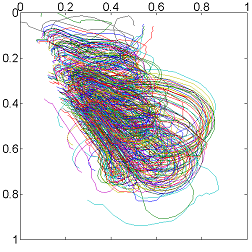

Left:Noiseless SSC illustration. Right:Noisy SSC illustration.

This is an geometric condition for the exact separation recovery of Lasso-SSC when data is noisy, or arbitrarily corrupted as long as the Frobenious norm of the additive error is bounded. We showed that the geometric gap of incoherence and inradius, which underpins the noiseless recovery guarantee in Soltanolkotabi and Candes's geometric analysis on SSC , is essentially proportional to the margin of error lassoSSC is able to tolerate. The results justified SSC's state-of-the-art performance in a number of real applications and reveal insight into what kind of problem can be robustly solved by Lasso-SSC.

In the JMLR extension of the work, we improved the bound and now we know that in the deterministic setting one can tolerate subgaussian noise of magnitude in the order of , where

is the ambient dimension of the datapoint.

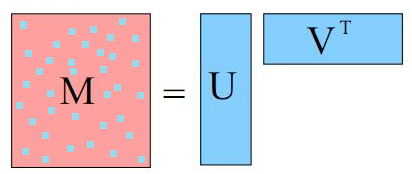

Matrix factorization with missing data

Matrix factorization is a fundamental building blocks of many popular machine learning algorithms for regression, dimension reduction, factor analysis, clustering and etc. While this approach is widely adopted and empirically sound, it is rather ungrounded in terms of theoretical analysis.

Dr. Xu and I produced a stability analysis to the formulation of factorization methods. The theory naturally explains the long observed robustness of factorization methods in collaborative recommendation system. To start from here, we hope to progress towards a comprehensive theory in the behaviors of factorization methods then design provably robust algorithms for collaborative filtering.

An actively updating blog of matrix factorization literature is here.

Practical matrix completion and matrix recovery

The recent theory of Emmanuel Candes, Terrence Tao, Ma Yi et. al about the tractable convex optimization to solve matrix completion and recovery are absolutely beautiful. Nevertheless, the real data matrix usually does not satisfy the assumptions which include incoherence, random support (of either observation mask or corruptions) and zero-noise. As an illustration, see the three feature trajectories of Oxford dinosaur sequence below:

Left: Input data with incomplete trajectories; Middle: Results of nuclear-norm based convex matrix completion; Right: Results of PARSuMi, our non-convex matrix completion algorithm.

Common practical challenges are:

- Diagonal-band-shaped data matrix

- Deterministic support of sampling or corruption

- Near degenerate subspace (diminishing singular values)

- Co-existence of noise, corruptions and missing data.

I hope to design algorithms (convex or non-convex) that works in practice by introducing additional constraints specific to the problem, such as exact rank, orthogonality, box constraint, non-negativity and smoothness.

Subspace clustering and motion segmentation

The intensive research on low-rank matrix recent years has come to a point that its subspace structure and behaviors are (almost) thoroughly understood. People have started to look at more complicated structure such as a union of multiple subspaces.

A motivating application is the segmentation of feature trajectories of multiple rigidly moving object. Such motion segmentation task is equivalent to subspace clustering because each motion is at most rank-4. The seminal SSC paper of Vidal et. al suggests that the key of segmentation is the so-called "self-expressiveness", namely, the vectors should be most easily represented by vectors from the same subspace group.

The theory of subspace clustering sees some great progress lately, featuring Soltanolkotabi and Candes' geometric analysis on SSC, Liu Guangcan's analysis of LRR and Elhamifar and Vidal's new PAMI version SSC.

However, there are still some persisting problems/open questions:

- General missing data, partial observations

- Random sparse corruptions

- Overlapping subspace

- Graph connectivity

- Stability results under noisy scenario

A recent paper titled "High-rank matrix completion" attempts to show that by assuming union-of-subspace model, the missing data of a matrix can be filled-in even if the matrix is full-rank! This is a head start towards a solution to Problem 1, but their local approach largely relies on assumption of the overwhelming number of column samples, which is by no means realistic.

Change detection for practical surveilance camera

Segmentation of meaningful foreground from background is a first step in many CV applications. When the camera is static, such detection is rather simple. In practice, however, there are graduate change of illumination, dynamic/stochastic background motion (tree branches, waves, rain, fog and etc), jittering of camera which causes severe motion blur. All these make the problem not that trivial at first glance.

We designed an algorithm that solves jittering, motion blur, and image inpainting simultaneously with a key step being 'matrix completion', the following is an illustration of its results on 'traffic' sequence:

From Left to Right: 1. aligned jittering/blurry input; 2. recovered background; 3. recovered foreground; 4. missing data used for matrix completion (detected by a run of RPCA on small images). Note that motion blur is removed and black band on top of the 3rd row (caused by image alignment) is recovered perfectly.